Facial recognition software has a gender problem

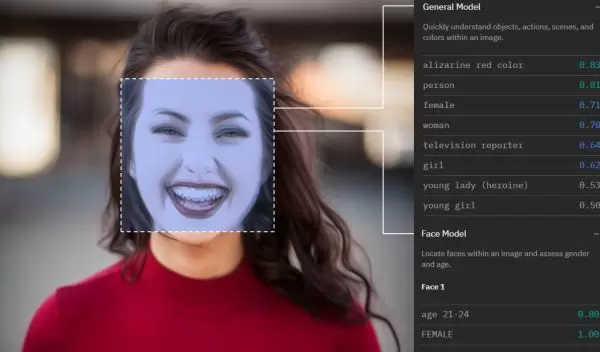

With a brief glance, facial recognition software can categorize gender with remarkable accuracy. But if that face belongs to a transgender person, such systems get it wrong more than one-third of the time, according to new CU Boulder research.

"We found that facial analysis services performed consistently worse on transgender individuals, and were universally unable to classify non-binary genders," said lead author Morgan Klaus Scheuerman. "While there are many different types of people out there, these systems have an extremely limited view of what gender looks like."

The study comes at a time when facial analysis technologies -- which use hidden cameras to assess and characterize certain features about an individual -- are becoming increasingly prevalent, embedded in everything from smartphone dating apps and digital kiosks at malls to airport security and law enforcement surveillance systems.

Previous research suggests these technologies tend to be most accurate when assessing the gender of white men but misidentify women of color as much as one-third of the time.

"We knew there were inherent biases in these systems around race and ethnicity and we suspected there would also be problems around gender," said senior author Jed Brubaker. "We set out to test this in the real world. These systems don't know any other language but male or female, so for many gender identities it is not possible for them to be correct."

The study suggests that such services identify gender based on outdated stereotypes. When Scheuerman, a male with long hair, submitted his picture, half of the services categorized him as female. "These systems run the risk of reinforcing stereotypes of what you should look like if you want to be recognized as a man or a woman," said Scheuerman. "That impacts everyone."

The research was funded by NSF's Directorate for Computer & Information Science & Engineering.